
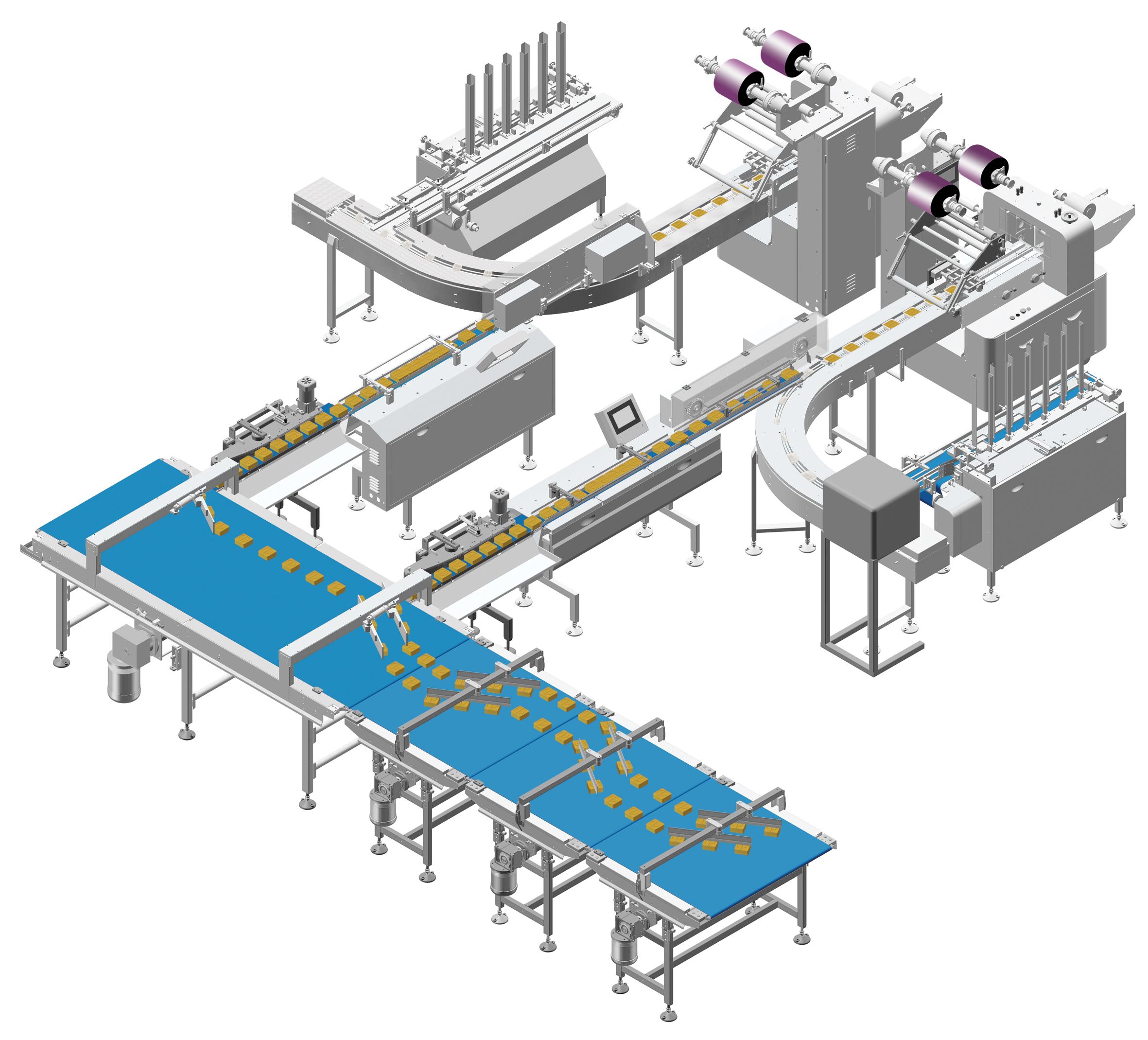

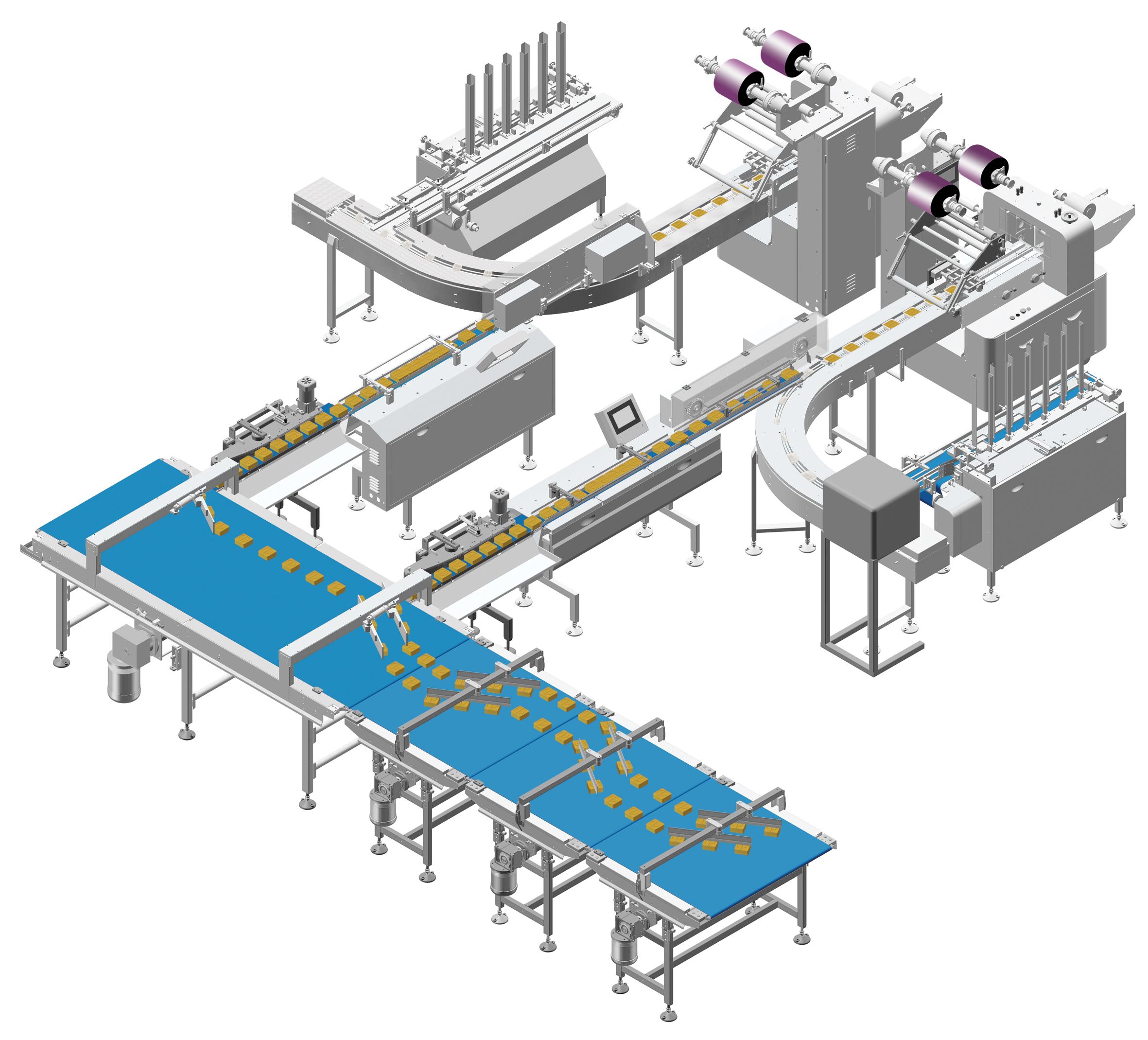

Why Smart Manufacturing

- by wittx 2019-04-25

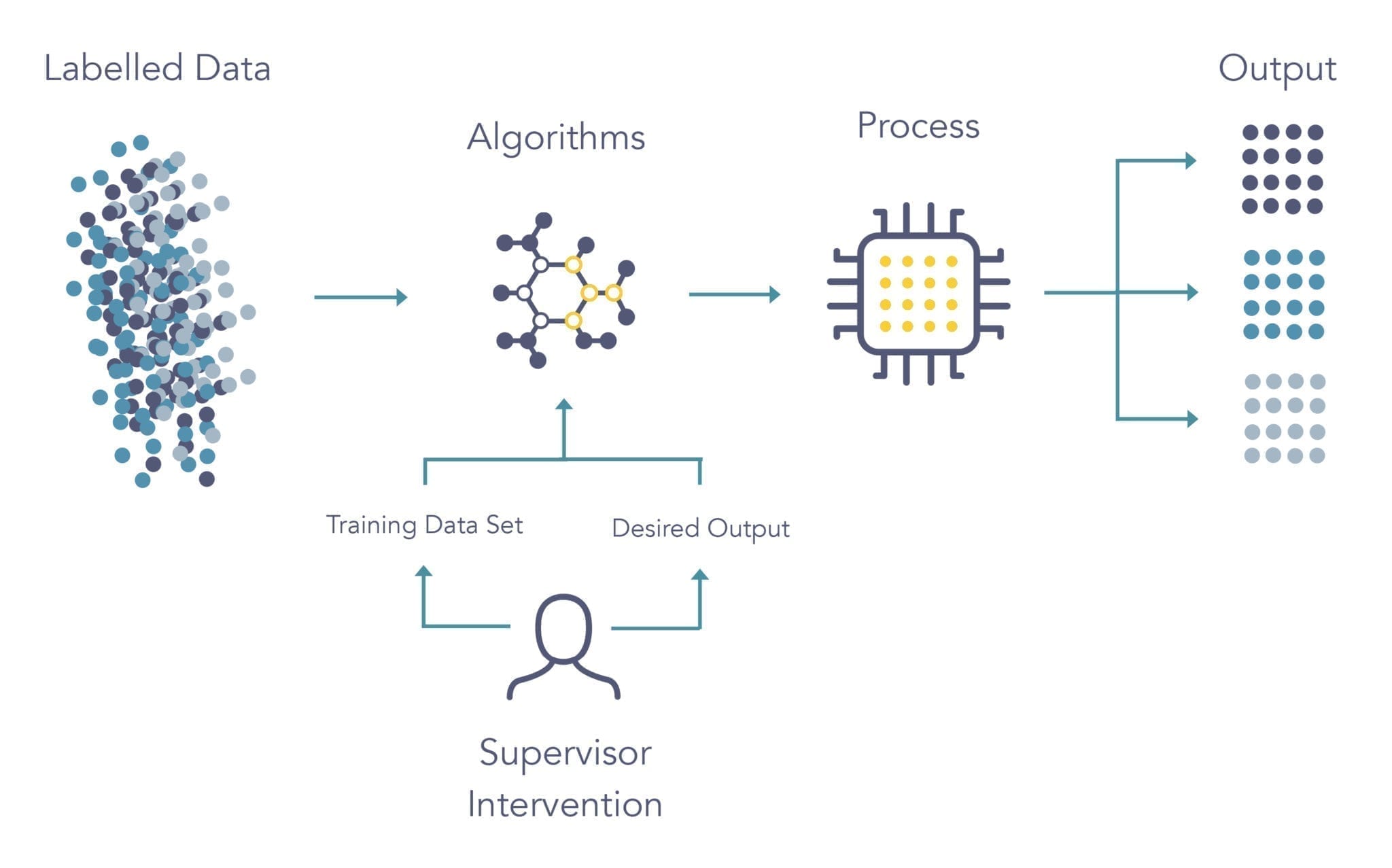

Since 2017, AI researchers have been using AI neural networks to help design better and faster AI neural networks. Applying AI in pursuit of better AI has, to date, been a largely academic pursuit, mainly because this approach requires tens of thousands of GPU hours. If that’s what it takes, it’s likely quicker and simpler to design real-world AI applications with the fallible guidance of educated guesswork.

Next month, however, a team of MIT researchers will be presenting a so-called “Proxyless neural architecture search” algorithm that can speed up the AI-optimized AI design process by 240 times or more. That would put faster and more accurate AI within practical reach for a broad class of image recognition algorithms and other related applications.

“There are all kinds of tradeoffs between model size, inference latency, accuracy, and model capacity,” says Song Han, assistant professor of electrical engineering and computer science at MIT. Han adds that:

“[These] all add up to a giant design space. Previously people had designed neural networks based on heuristics. Neural architecture search tried to free this labor intensive, human heuristic-based exploration [by turning it] into a learning-based, AI-based design space exploration. Just like AI can [learn to] play a Go game, AI can [learn how to] design a neural network.”

And just like AI programs that have been victorious at Go and chess have taught grandmasters of those games new strategies, the AI-optimized AI results have provided new approaches to AI neural network design.

The type of AI neural network whose development the new MIT algorithm speeds up is called a convolutional neural network (CNN). CNNs are typically the go-to neural nets for image recognition programs. CNNs’ applications beyond images and video include natural language processing and drug discovery.

MIT’s Han notes that once his group’s algorithm sets up its optimal CNN, the resulting system will likely classify images 1.8 times as fast as the current industry standard.

Han says three big ideas contributed to the team’s ability to pinpoint optimal CNN designs at such blazing speeds.

For one, they reduced the memory load on GPUs running a neural architecture search. A standard neural architecture search might examine all possible connections between neural layers in the network simultaneously. Han’s group instead kept just one path at a time in the GPU’s memory. This trick, which enables a full search of the parameter space while using one-tenth the memory, allowed their search to cover more network configurations without running out of room on the chip.
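The single-path idea can be illustrated with a toy sketch. This is not the MIT team's code: the candidate operation names, the five-layer network, and the `activation_slots` bookkeeping are all hypothetical, chosen only to show why sampling one path at a time needs far less memory than holding every candidate at once.

```python
import math
import random

# Hypothetical candidate operations for each layer (illustrative names only).
CANDIDATE_OPS = ["conv3x3", "conv5x5", "conv7x7", "skip"]


def softmax(scores):
    """Convert raw architecture scores into selection probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sample_path(arch_scores, rng):
    """Pick one candidate op per layer; only these ops need to sit in memory."""
    path = []
    for layer_scores in arch_scores:
        probs = softmax(layer_scores)
        index = rng.choices(range(len(probs)), weights=probs, k=1)[0]
        path.append(CANDIDATE_OPS[index])
    return path


def activation_slots(num_layers, ops_per_layer, single_path):
    """Count how many per-layer outputs must be stored at the same time."""
    return num_layers if single_path else num_layers * ops_per_layer


rng = random.Random(0)
# Five layers, every candidate equally likely at the start of the search.
arch_scores = [[0.0] * len(CANDIDATE_OPS) for _ in range(5)]
path = sample_path(arch_scores, rng)
print(path)
print(activation_slots(5, len(CANDIDATE_OPS), single_path=False))  # 20
print(activation_slots(5, len(CANDIDATE_OPS), single_path=True))   # 5
```

In this toy setup the saving scales with the number of candidates per layer. The one-tenth figure quoted in the article depends on the real search space, which this sketch does not model.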

The second trick up their sleeve was pruning: paths that the search had already discarded were removed from the network entirely, which markedly sped up the search process.

A third innovation involved making the neural net search aware of the latency of each type of hardware the AI system might run on, from plain CPUs and GPU-accelerated systems to mobile platforms.
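One common way to make a search hardware-aware is to add a latency term to the quantity being minimized. The sketch below assumes that approach; the latency table, the `latency_weight` parameter, and the function names are invented for illustration, and the numbers are not measurements from any real device.

```python
import math

# Hypothetical candidate ops and per-op latencies in milliseconds.
# These values are made up for illustration only.
OPS = ("conv3x3", "conv5x5", "conv7x7")
LATENCY_MS = {
    "gpu": {"conv3x3": 0.30, "conv5x5": 0.35, "conv7x7": 0.40},
    "mobile": {"conv3x3": 1.00, "conv5x5": 2.50, "conv7x7": 4.50},
}


def softmax(scores):
    """Convert raw architecture scores into selection probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def expected_latency(layer_scores, hardware):
    """Probability-weighted latency of one layer's candidates on a platform."""
    probs = softmax(layer_scores)
    return sum(p * LATENCY_MS[hardware][op] for p, op in zip(probs, OPS))


def search_loss(task_loss, arch_scores, hardware, latency_weight=0.1):
    """Task loss plus a penalty that grows with the network's expected latency."""
    total_latency = sum(expected_latency(s, hardware) for s in arch_scores)
    return task_loss + latency_weight * total_latency


# Two layers with every candidate equally likely.
arch_scores = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
print(search_loss(1.0, arch_scores, "gpu"))
print(search_loss(1.0, arch_scores, "mobile"))
```

Because the penalty uses a per-platform table, the same search can favor different operations on a GPU than on a phone, which fits the hardware-specific results Han describes.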

One surprise, Han says, was that the conventional wisdom about some kinds of image recognition neural nets turned out to be limited.

CNNs use filters in their image recognition algorithms that are square grids comprising 3-by-3, 5-by-5, or 7-by-7 pixels. Conventionally, 7x7-size filters are rarely used, because the thinking has been that it’s faster to run more layers of 3x3 filters than a single 7x7 filter.

However, Han says, the AI-optimized AI used a fair number of 7x7 filters—something Han attributes to the predominance of GPUs in most AI calculations today.

“It found 7x7 layers are easier on a GPU because a GPU has large parallelism,” Han says. “And invoking a large kernel call is more efficient than invoking several small kernel calls.”
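The trade-off between filter sizes can be checked with some quick arithmetic. The sketch below assumes stride-1 convolutions with no dilation, ignores biases and channel counts, and treats each layer as one kernel call, which is a simplification. The function names are illustrative.

```python
def receptive_field(kernel_size, num_layers):
    """Receptive field of stacked stride-1 convolutions with equal kernel size."""
    return 1 + num_layers * (kernel_size - 1)


def weights_per_channel_pair(kernel_size, num_layers):
    """Filter weights per input/output channel pair, biases ignored."""
    return num_layers * kernel_size * kernel_size


# Three stacked 3x3 layers versus a single 7x7 layer.
print(receptive_field(3, 3))           # 7
print(receptive_field(7, 1))           # 7
print(weights_per_channel_pair(3, 3))  # 27
print(weights_per_channel_pair(7, 1))  # 49
```

Both options see the same 7-by-7 patch of the input. The stacked 3x3 layers use fewer weights, which is the basis of the conventional preference, but under the one-call-per-layer assumption they need three kernel calls where the 7x7 layer needs one. That is the cost Han says matters on a highly parallel GPU.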

Of his team’s algorithm, Han says, “It provides good feedback for human engineers to design future neural networks.” It’s not, however, meant to enable AIs to build more powerful versions of themselves. (Those who fear the AI apocalypse may not have much to work with in the current research.)

This story was updated on 4 April 2019.

Share Http URL: http://www.wittx.cn/get_news_message.do?new_id=189

Best Last Month

Information industry by wittx

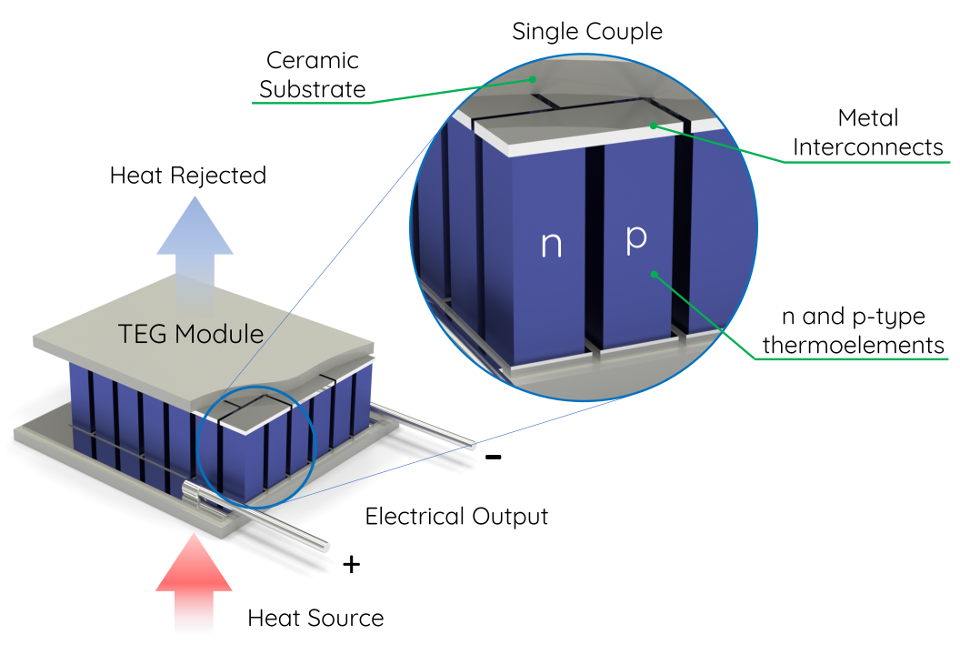

Information industry by wittxThree dimensional architected thermoelectric devices with high toughness and power conversion effici

Information industry by wittx

Information industry by wittx

Information industry by wittx

Mechanical electromechanical by wittx

Electronic electrician by wittx

Information industry by wittx

Information industry by show

Information industry by wittx